Jobs

Jobs form a crucial component of any workflow. This document describes the features of jobs and provides examples of their use.

Overview

Each workflow is made up of a series of jobs, which are separate tasks performed on a runner. Using jobs, we can:

- Run a series of command-line applications in the form of steps.

- Automate tools and applications.

- Form complex dependencies between jobs.

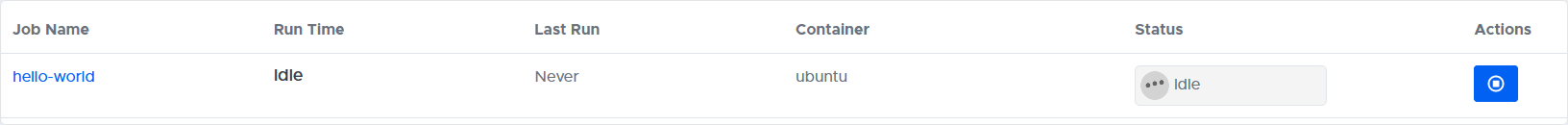

The jobs for a particular workflow may be found by clicking on the workflow in a project's page.

Status

Jobs may have a particular status:

| Status | Description |
|---|---|
| Passed | Job has completed successfully |
| Failed | Job has failed |
| Pending | Job is in progress |
| Idle | Job has not started |

Configuration Examples

Concurrent jobs

The following example shows a single workflow that will run two jobs in parallel.

By default, all jobs will run in parallel.

To create jobs, you need a jobs: section in .bbx/config.yaml.

Each job requires a unique name. In this example, the jobs are named example-job1 and example-job2.

Since neither job depends on the other, they run in parallel.

    runners:
      example-runner:
        image: work1-virtualbox:5000/ubuntu-generic

    jobs:
      example-job1:
        runner: example-runner
        steps:
          - run:
              name: Say Hello World 1
              command: |
                echo "Hello World 1"
      example-job2:
        runner: example-runner
        steps:
          - run:
              name: Say Hello World 2
              command: |
                echo "Hello World 2"

    workflows:
      workflow-example:
        jobs:
          - example-job1
          - example-job2

Dependent jobs

Jobs may require the completion of another job, such as when running a build and then testing that build.

Dependencies between jobs are created through the depends field.

The following example shows a single workflow that runs three jobs, two of which depend on the third.

To create a dependency, include a depends section in the job, followed by a list of the jobs that the current job depends on.

In this case, example-test-1 and example-test-2 both depend on example-build.

Once example-build successfully completes, both the test jobs that depend on it can run concurrently (the two test jobs in this example do not depend on each other).

    runners:
      example-runner:
        image: work1-virtualbox:5000/ubuntu-generic

    jobs:
      example-build:
        runner: example-runner
        steps:
          - run:
              name: Running a build
              command: echo "Running a build"
      example-test-1:
        runner: example-runner
        depends:
          - example-build
        steps:
          - run:
              name: Running a Test
              command: echo "Running the first test"
      example-test-2:
        runner: example-runner
        depends:
          - example-build
        steps:
          - run:
              name: Running a Test
              command: echo "Running the second test"

    workflows:
      workflow-example:
        jobs:
          - example-build
          - example-test-1
          - example-test-2
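
The same depends field can also express a strictly sequential chain, where each job waits for the one before it. The following is a minimal sketch, assuming that a job listed under depends may itself depend on another job. The job names example-test and example-package are hypothetical, and the echo commands are placeholders.

```yaml
runners:
  example-runner:
    image: work1-virtualbox:5000/ubuntu-generic

jobs:
  example-build:
    runner: example-runner
    steps:
      - run:
          name: Running a build
          command: echo "Running a build"
  # Hypothetical job: waits for example-build.
  example-test:
    runner: example-runner
    depends:
      - example-build
    steps:
      - run:
          name: Running a test
          command: echo "Running a test"
  # Hypothetical job: waits for example-test, and so indirectly for example-build.
  example-package:
    runner: example-runner
    depends:
      - example-test
    steps:
      - run:
          name: Packaging the build
          command: echo "Packaging the build"

workflows:
  workflow-example:
    jobs:
      - example-build
      - example-test
      - example-package
```

In this sketch, no two jobs would be expected to run concurrently, because each job depends on the previous one.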

Dependency conditions

When defining dependencies, you may also attach conditions to them to control when subsequent jobs run. The config code below shows how a job can be made to run based on the results of previous jobs. The details of each job have been omitted for simplicity.

    jobs:
      first-job:
      second-job:
      third-job:
      fourth-job:
      fifth-job:
        depends:
          - first-job
          - second-job:
              when: on-pass
          - third-job:
              when: on-fail
          - fourth-job:
              when: on-completion

In this example, before fifth-job can run:

- first-job must pass.

- second-job must pass.

- third-job must fail.

- fourth-job must complete (either pass or fail).

If any of these conditions is not met, fifth-job is skipped.
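
A typical use of these conditions is to react to a failure, or to run a final job regardless of the outcome. The fragment below is a sketch that reuses only the depends and when keys shown above. The job names example-build, report-failure and cleanup are hypothetical, and the job details are again omitted.

```yaml
jobs:
  example-build:
  report-failure:
    depends:
      # Hypothetical job: runs only if example-build fails.
      - example-build:
          when: on-fail
  cleanup:
    depends:
      # Hypothetical job: runs once example-build completes, pass or fail.
      - example-build:
          when: on-completion
```

Under the skipping rule described above, report-failure would be skipped when example-build passes, while cleanup would run in either case.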

Privileged Jobs

There are some instances where the runner requires elevated privileges to access resources on the host machine. Such resources include, but are not limited to, USB ports, serial ports, and external hardware connected to the machine. To grant these privileges, set privileged: True in the job definition.

    jobs:
      example-job:
        runner: example-runner
        privileged: True
        steps:
          - run:
              name: Running a step
              command: echo "Running a step"

Accessing Devices

When accessing external devices using the built-in devices feature of BeetleboxCI, you will need to include the device that the job will access in the definition of the job. This allows the job to use the named device from the device registry.

    jobs:
      example-job:
        runner: example-runner
        device: zcu104

In many cases, accessing the device requires the job to have elevated privileges. Jobs run in isolated containers, so by default they do not have permission to access hardware devices attached to the server. For instance, a device connected to the server via USB can only be accessed by a job that has elevated privileges. Privileged access is not necessary for SSH communication.

    jobs:
      example-job:
        runner: example-runner
        privileged: True
        device: raspberry-pi-pico

Outputting artifacts

Files that are generated by a job can be stored as an artifact.

The artifact is located relative to the current working directory, which is ~/ by default.

Absolute paths may also be used.

An artifact outputted by a job is named after the job that produced it.

In this snippet of config code, the file example-0.txt (which we assume has been produced by the job) is compressed into a tarball and outputted as an artifact named job-0.

    jobs:
      job-0:
        runner: example-runner
        output:
          artifact:
            - example-0.txt
        steps:
          ...

Inputting artifacts

Artifacts may be used as inputs to jobs.

Within the job, the input: key, followed by artifact: and a list of artifacts, indicates the artifacts that are to be placed on the runner.

Where an artifact is placed on the runner depends on the artifact type.

In the following code snippet, the artifact example-1.txt is used in the job job-1.

If the artifact is of type miscellaneous, it is placed in the current working directory on the runner, which is ~/ by default.

    jobs:
      job-1:
        runner: example-runner
        input:
          artifact:
            - example-1.txt
        steps:
          ...

You can also use the output artifact of one job as an input artifact to a subsequent job.

Where the artifact of one job is used in a subsequent job, the depends field is included to ensure that the first job has completed before its output is used by any subsequent job.

    jobs:
      job-0:
        runner: example-runner
        output:
          artifact:
            - example-0.txt
        steps:
          ...
      job-1:
        runner: example-runner
        depends:
          - job-0
        input:
          artifact:
            - job-0
        steps:
          ...
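
For reference, a complete configuration built around this pattern might look like the sketch below. The steps are illustrative assumptions and are not taken from the snippet above: job-0 writes example-0.txt with a plain shell redirect, and job-1 only lists its working directory, because where the job-0 artifact is placed depends on its artifact type. The workflow name is likewise hypothetical.

```yaml
runners:
  example-runner:
    image: work1-virtualbox:5000/ubuntu-generic

jobs:
  job-0:
    runner: example-runner
    output:
      artifact:
        - example-0.txt
    steps:
      - run:
          # Illustrative step: creates the file that is stored as the artifact.
          name: Generate a file
          command: echo "Hello from job-0" > example-0.txt
  job-1:
    runner: example-runner
    depends:
      - job-0
    input:
      artifact:
        - job-0
    steps:
      - run:
          # Illustrative step: shows whatever was placed in the working directory.
          name: List the working directory
          command: ls -l

workflows:
  workflow-example:
    jobs:
      - job-0
      - job-1
```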

Mounting Volumes

To use volumes within jobs, include the volumes field and list the directories from the node that are to be mounted within the container. Each directory is mounted inside the container at exactly the same path as on the node.

    jobs:
      random-job:
        volumes:
          - mount:
              name: volume1
              path: /tmp/firstvolume
          - mount:
              name: volume2
              path: /tmp/secondvolume
          - mount:
              name: volume3
              path: /opt/thirdvolume

Selecting Nodes

The user may specify that a job must run on a particular node in a cluster.

This would be necessary if a job requires access to specific hardware on a particular node or needs to mount a specific directory from a particular node.

The node_selector value is a dict which must match the labels on a particular node for a job to be placed on that node.

To view the labels of a particular node, run the command kubectl describe node [name-of-node].

The labels are listed in the form key=value, but in config.yaml they must be written as key: value.

If this field is not specified, the job will automatically be placed onto any of the available nodes in the cluster.

    jobs:
      first-job:
        node_selector:
          kubernetes.io/hostname: name-of-node
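
Since one reason for selecting a node is to mount a directory that exists only on that node, node_selector and volumes may be used together. The following is a sketch under the assumption that both fields can be combined in a single job. The job name pinned-job and the ls step are hypothetical, and name-of-node stands in for a real node name.

```yaml
jobs:
  # Hypothetical job that is pinned to one node and mounts a directory from it.
  pinned-job:
    runner: example-runner
    node_selector:
      kubernetes.io/hostname: name-of-node
    volumes:
      - mount:
          name: volume1
          path: /tmp/firstvolume
    steps:
      - run:
          # The directory is expected at the same path inside the container.
          name: List the mounted directory
          command: ls /tmp/firstvolume
```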